Sensing Micro-Motion Human Patterns using Multimodal mmRadar and Video Signal for Affective and Psychological Intelligence

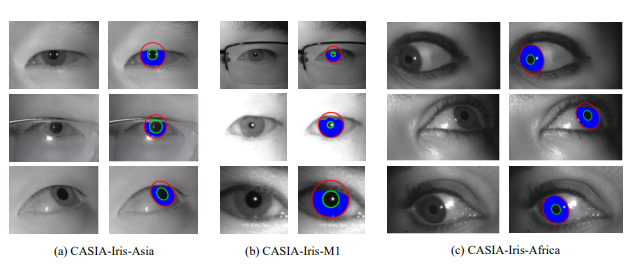

Iris Segmentation and Localization: Samples

Abstract

Affective and psychological perception are pivotal to human-machine interaction and are essential domains of artificial intelligence. Existing physiological signal-based affective and psychological datasets rely primarily on contact-based sensors, which can themselves induce extraneous affective responses during measurement. Creating accurate non-contact affective and psychological perception datasets is therefore crucial for overcoming these limitations and advancing affective intelligence. In this paper, we introduce the Remote Multimodal Affective and Psychological (ReMAP) dataset and, for the first time, apply head micro-tremor (HMT) signals to affective and psychological perception. ReMAP features 68 participants and comprises two sub-datasets. The stimulus videos used for affective perception undergo rigorous screening to ensure that affective elicitation is both effective and universal. Additionally, we propose a novel remote affective and psychological perception framework that leverages the complementarity and interrelationships among modalities to enhance affective and psychological perception. Extensive experiments demonstrate that HMT is a “small yet powerful” physiological signal for psychological perception. Our method outperforms existing state-of-the-art approaches in remote affective recognition and psychological perception. The ReMAP dataset is publicly accessible at https://remap-dataset.github.io/ReMAP.